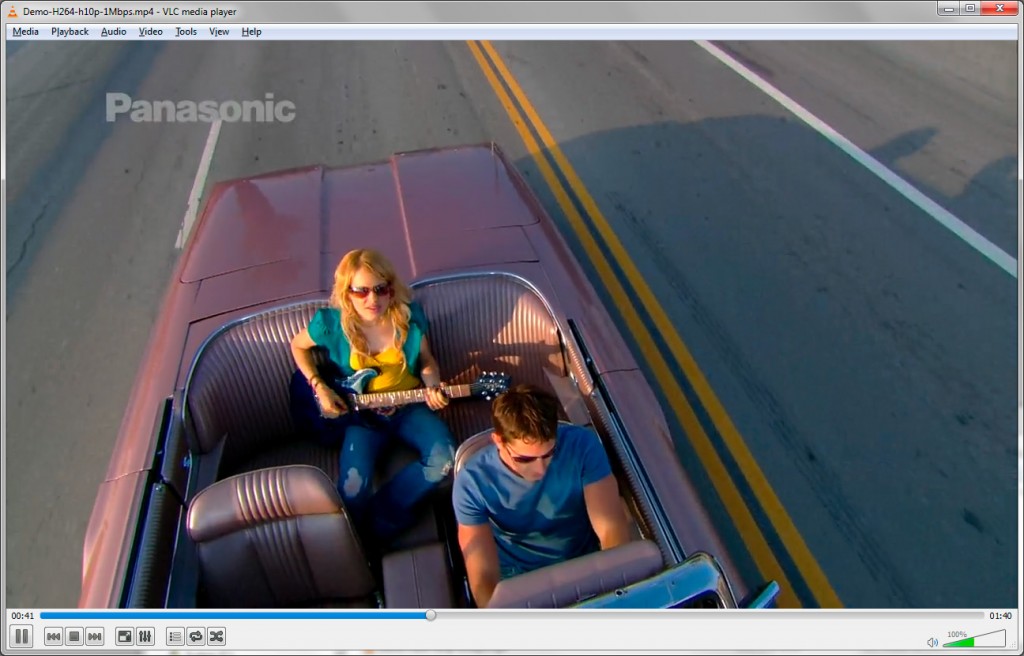

MediaCoder has supported H.264 High 10 Profile (10-bit color depth) encoding for quite a long time. However, because good, free decoders and players for this profile were lacking, I hadn’t seriously tested it until today. I encoded a 720p video clip in Hi10P at 1Mbps (really low for HD content) and played the encoded content with VLC 2.0.1. The result is quite impressive.

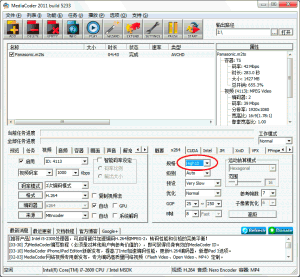

MediaCoder configured for Hi10P encoding

1Mbps 720p H.264 Hi10P Playback

4:2:2 10-bit is already the de facto standard for professional video because that is how it is captured and transmitted over SDI. The entire production chain (film scanning, video editing, archiving, etc.) uses at least 10-bit signals. But when it comes to broadcast contribution, encoders and decoders are still limited to 8-bit, usually with 4:2:0 chroma subsampling, just as with consumer video. The result is that when video is transmitted from one point to another, picture information can get lost and quality can suffer.
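
To put a number on how much raw data the 8-bit 4:2:0 conversion discards, here is a rough sketch that counts uncompressed bits per frame at 720p (the resolution of the clip above). It ignores blanking, padding and container overhead, and the function name is just for illustration.

```python
def raw_frame_bits(width: int, height: int, chroma: str, bit_depth: int) -> int:
    """Uncompressed bits for one frame: a luma plane plus two chroma planes."""
    luma = width * height
    if chroma == "4:2:0":
        # chroma halved both horizontally and vertically
        chroma_plane = (width // 2) * (height // 2)
    elif chroma == "4:2:2":
        # chroma halved horizontally only
        chroma_plane = (width // 2) * height
    elif chroma == "4:4:4":
        # no chroma subsampling
        chroma_plane = width * height
    else:
        raise ValueError(f"unsupported chroma format: {chroma}")
    return (luma + 2 * chroma_plane) * bit_depth


professional = raw_frame_bits(1280, 720, "4:2:2", 10)
consumer = raw_frame_bits(1280, 720, "4:2:0", 8)
print(professional, consumer, round(consumer / professional, 3))
```

Under these assumptions the 8-bit 4:2:0 frame keeps 60% of the raw bits of the 10-bit 4:2:2 frame, so 40% is thrown away before the encoder even sees the picture.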

Why does 10-bit save bandwidth (even when content is 8-bit)?

10-bit compression of a 10-bit source provides better quality than 8-bit compression algorithms. The reason is simply that the scaling stages needed before encoding and after decoding (down to 8-bit 4:2:0 before the encoder, and back up again after the decoder) are much less efficient than the MPEG compression algorithm. In other words, it is better to compress than to just scale video components to reduce the amount of transmitted information. Thus 10-bit compression of 10-bit sources actually saves bandwidth. The other advantage is that it is possible to transmit details that would have been destroyed by the scaling stages, fine gradients for instance.
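
A minimal sketch of how a scaling stage destroys a fine gradient, assuming "scaling" here means a plain 10-bit to 8-bit reduction by truncation and a shift back up after decoding. Real converters may round or dither, and the chroma subsampling step is left out.

```python
def to_8bit(v10: int) -> int:
    # bit-depth reduction by truncation: drop the 2 least significant bits
    return v10 >> 2


def to_10bit(v8: int) -> int:
    # scale back up to the 10-bit range after decoding
    return v8 << 2


# a fine 10-bit gradient: one code value per pixel
gradient = list(range(512, 520))
round_trip = [to_10bit(to_8bit(v)) for v in gradient]

distinct_before = len(set(gradient))
distinct_after = len(set(round_trip))
worst_error = max(abs(a - b) for a, b in zip(gradient, round_trip))
print(distinct_before, distinct_after, worst_error)
```

Eight distinct levels collapse into two flat steps, which is exactly the banding a smooth gradient turns into after an 8-bit round trip.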

What does “saving bandwidth” mean?

Bandwidth is saved if less bit-rate is needed while keeping the same video quality. When comparing encoders or encoding technologies, both bit-rate and video quality have to be considered: less bit-rate at the same quality is equivalent to more quality at the same bit-rate.

What is a better video quality?

The video quality is better when the decoded video is closer to the source. The most common way of expressing “closeness” in video processing is to state that there are fewer errors relative to the pixel bit-depth. For example, a 1-bit error on an 8-bit signal gives the same relative error as a 3-bit error on a 10-bit signal: only 7 bits are actually meaningful in both cases.
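
The arithmetic behind that example, reading "n bits of error" as an error that fills the lowest n bits of the sample (the function names are illustrative):

```python
def relative_error(bit_depth: int, error_bits: int) -> float:
    """Error spanning the lowest `error_bits` bits, relative to full scale."""
    return (1 << error_bits) / (1 << bit_depth)


def meaningful_bits(bit_depth: int, error_bits: int) -> int:
    """Bits left uncorrupted by the error."""
    return bit_depth - error_bits


print(relative_error(8, 1), relative_error(10, 3))
print(meaningful_bits(8, 1), meaningful_bits(10, 3))
```

Both cases give a relative error of 2^-7, i.e. 7 meaningful bits.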

Where are errors introduced in an MPEG encoder?

Video is compressed with a lossy process that introduces errors relative to the source: the quantization process. Since those errors are relative, they do not depend on the source pixel bit-depth, but only on the efficiency of the encoding tools when a given bit-rate has to be achieved.
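
A minimal sketch of where that loss happens. A plain uniform scalar quantizer stands in here for H.264's actual integer quantization (which uses QP-dependent scaling and rounding offsets), and the coefficient values and step size are made up for illustration.

```python
def quantize(coeff: float, step: float) -> int:
    # the lossy step: map a transform coefficient to an integer level
    return round(coeff / step)


def dequantize(level: int, step: float) -> float:
    # the decoder can only recover a multiple of the step size
    return level * step


coeffs = [-37.0, -5.2, 0.4, 3.9, 12.5, 101.3]  # hypothetical coefficients
step = 8.0                                     # hypothetical step size
errors = [abs(c - dequantize(quantize(c, step), step)) for c in coeffs]
print(max(errors) <= step / 2)
```

A larger step means fewer distinct levels to code (lower bit-rate) and a larger worst-case error, bounded by half a step.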

What happens with a 10-bit video source when using a “perfect” MPEG encoder?

A 10-bit source carries 25% more raw data per sample than an 8-bit one (10 bits versus 8). Assuming equally efficient encoding tools, the same bit-rate can be achieved just by quantizing 4 times more coarsely (a step size 2² = 4 times larger, which removes the 2 extra bits). Since the relative error is the same in both cases, the video quality should not change.
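
A simplified sketch of that argument. Like the paragraph above, it applies the quantizer directly to sample values rather than to transform coefficients, and the step size `q` is a hypothetical value.

```python
def levels(bit_depth: int, step: int) -> int:
    """Number of distinct reconstruction levels covering the sample range."""
    return (1 << bit_depth) // step


def relative_max_error(bit_depth: int, step: int) -> float:
    """Worst-case uniform-quantizer error (half a step) relative to full scale."""
    return (step / 2) / (1 << bit_depth)


q = 4  # hypothetical quantizer step for the 8-bit encode
print(levels(8, q), levels(10, 4 * q))
print(relative_max_error(8, q) == relative_max_error(10, 4 * q))
```

A step 4 times larger on the 10-bit source leaves the same number of levels to code and the same relative error, which is why a "perfect" encoder would show no difference.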

So why does an AVC/H.264 10-bit encoder perform better than an 8-bit one?

When encoding with the 10-bit tool, the compression process is performed with at least 10-bit accuracy, compared to only 8-bit otherwise. So there are fewer truncation errors, especially in the motion compensation stage, which increases the efficiency of the compression tools. As a consequence, less quantization is needed to achieve a given bit-rate.
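
A toy model of those truncation errors, assuming 8-bit content that is scaled up by 2 bits when processed at 10-bit precision. H.264's real luma interpolation uses a six-tap filter for half-pel positions and rounded averaging for quarter-pel positions; here both stages are plain rounded averages, and `quarter_pel` is a hypothetical helper. The exactly-zero error at 10-bit is a property of this toy, not a general result.

```python
def quarter_pel(a: int, b: int, extra_bits: int) -> float:
    """Toy two-stage interpolation between 8-bit pixels a and b.

    Intermediate values are rounded to integers at a working precision of
    8 + extra_bits bits; the result is returned in 8-bit units.
    """
    scale = 1 << extra_bits
    scaled_a, scaled_b = a * scale, b * scale
    half = (scaled_a + scaled_b + 1) >> 1    # half-pel sample, rounded
    quarter = (scaled_a + half + 1) >> 1     # quarter-pel sample, rounded again
    return quarter / scale


def mean_abs_error(extra_bits: int) -> float:
    """Average deviation from the exact quarter-pel value over all 8-bit pairs."""
    total = 0.0
    for a in range(256):
        for b in range(256):
            exact = (3 * a + b) / 4
            total += abs(quarter_pel(a, b, extra_bits) - exact)
    return total / (256 * 256)


err_8bit = mean_abs_error(0)   # intermediates rounded at 8-bit precision
err_10bit = mean_abs_error(2)  # intermediates rounded at 10-bit precision
print(err_8bit, err_10bit)
```

The rounding at each intermediate stage is what accumulates at 8-bit precision; the two extra bits absorb it, so the prediction stays closer to the exact value and the residual left to code is smaller.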

The net result is better quality for the same bit-rate or, conversely, less bit-rate for the same quality: savings of between 5% and 20% on typical sources.
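
The quoted range is easy to turn into concrete bit-rates. This is purely arithmetic on the 5% to 20% figures, not a measurement of the clip above; the 1000 kbps reference and the function names are hypothetical.

```python
def bitrate_saving(ref_kbps: float, test_kbps: float) -> float:
    """Fractional bit-rate saving of `test` over `ref`, at equal quality."""
    return (ref_kbps - test_kbps) / ref_kbps


def equivalent_bitrate(ref_kbps: float, saving: float) -> float:
    """Bit-rate needed for the same quality, given a fractional saving."""
    return ref_kbps * (1 - saving)


# the 5%-20% range quoted above, applied to a hypothetical 1000 kbps 8-bit encode
for saving in (0.05, 0.20):
    print(f"{saving:.0%} saving -> {round(equivalent_bitrate(1000, saving))} kbps")
```

Both functions only make sense when the two encodes have been matched on quality first, which is the condition stated under "What does “saving bandwidth” mean?".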